Edge-Device LLM Finetuning

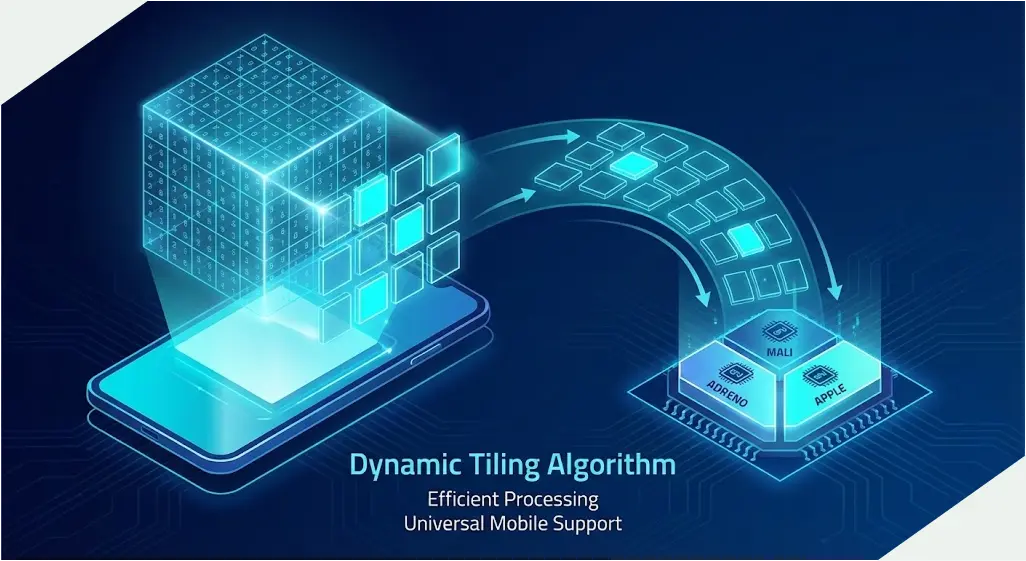

Our edge-first framework turns any consumer device into a capable fine-tuning node. No central clouds, no massive data centers, no vendor dependency.

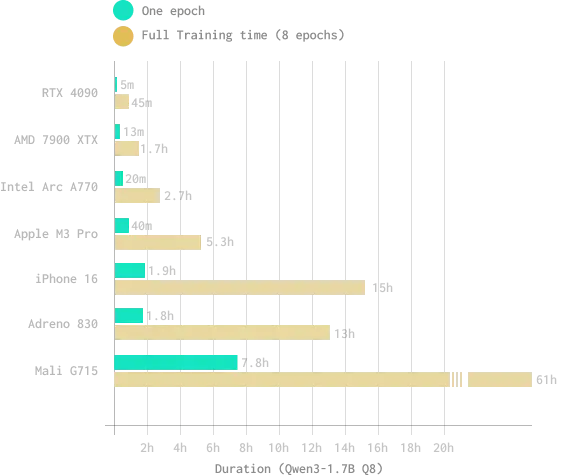

From Android smartphones to high-end workstations, our unified system supports LoRA fine-tuning directly in the llama.cpp ecosystem, so you can initialize, train, checkpoint, and merge adapters locally for maximum privacy and resilience.

    # For Apple Silicon
    curl -L https://github.com/tetherto/qvac-fabric/releases/download/v1.0/qvac-macos-apple-silicon-v1.0.zip -o qvac-macos.zip
    unzip qvac-macos.zip
    cd qvac-macos-apple-silicon-v1.0

    # Download model
    mkdir -p models
    wget https://huggingface.co/Qwen/Qwen3-1.7B-GGUF/resolve/main/qwen3-1_7b-q8_0.gguf -O models/qwen3-1.7b-q8_0.gguf

    # Download dataset
    wget https://raw.githubusercontent.com/tetherto/qvac-fabric/main/datasets/train.jsonl

    # Quick test with email style transfer
    ./bin/llama-finetune-lora \
      -m models/qwen3-1.7b-q8_0.gguf \
      -f train.jsonl \
      -c 512 -b 128 -ub 128 -ngl 999 \
      --lora-rank 16 --lora-alpha 32 \
      --num-epochs 3
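The `--lora-rank 16` and `--lora-alpha 32` flags in the quick test can be read through the standard LoRA formulation. As a sketch, assuming Fabric follows that formulation (the symbols below are illustrative and not taken from the Fabric documentation), a frozen weight matrix $W_0 \in \mathbb{R}^{d \times k}$ receives a trainable low-rank update:

$$
W = W_0 + \frac{\alpha}{r}\, B A, \qquad B \in \mathbb{R}^{d \times r},\quad A \in \mathbb{R}^{r \times k}
$$

With $r = 16$ and $\alpha = 32$, the update is scaled by $\alpha / r = 2$, and each adapted matrix trains $r(d + k)$ parameters instead of $dk$. Under this reading, that reduction is what keeps training within reach of consumer hardware.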

Start by using our pre-trained adapters

To accelerate development and innovation, we have publicly released fine-tuned LoRA adapters trained with Fabric. These adapters work on any GPU and are available for Qwen3 and Gemma3 models.